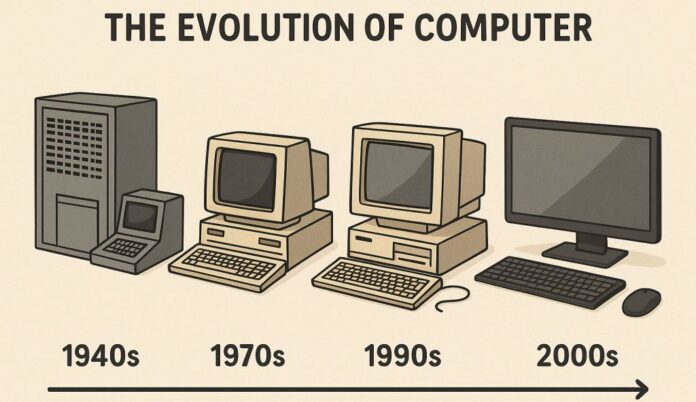

Computers have changed the world in unimaginable ways since their first appearance. They have grown from bulky, room-sized machines into small, powerful devices that are integral to our everyday lives. This blog explores the history, development, and effects of computers, highlighting essential milestones and describing what the future may hold for this ever-changing technology.

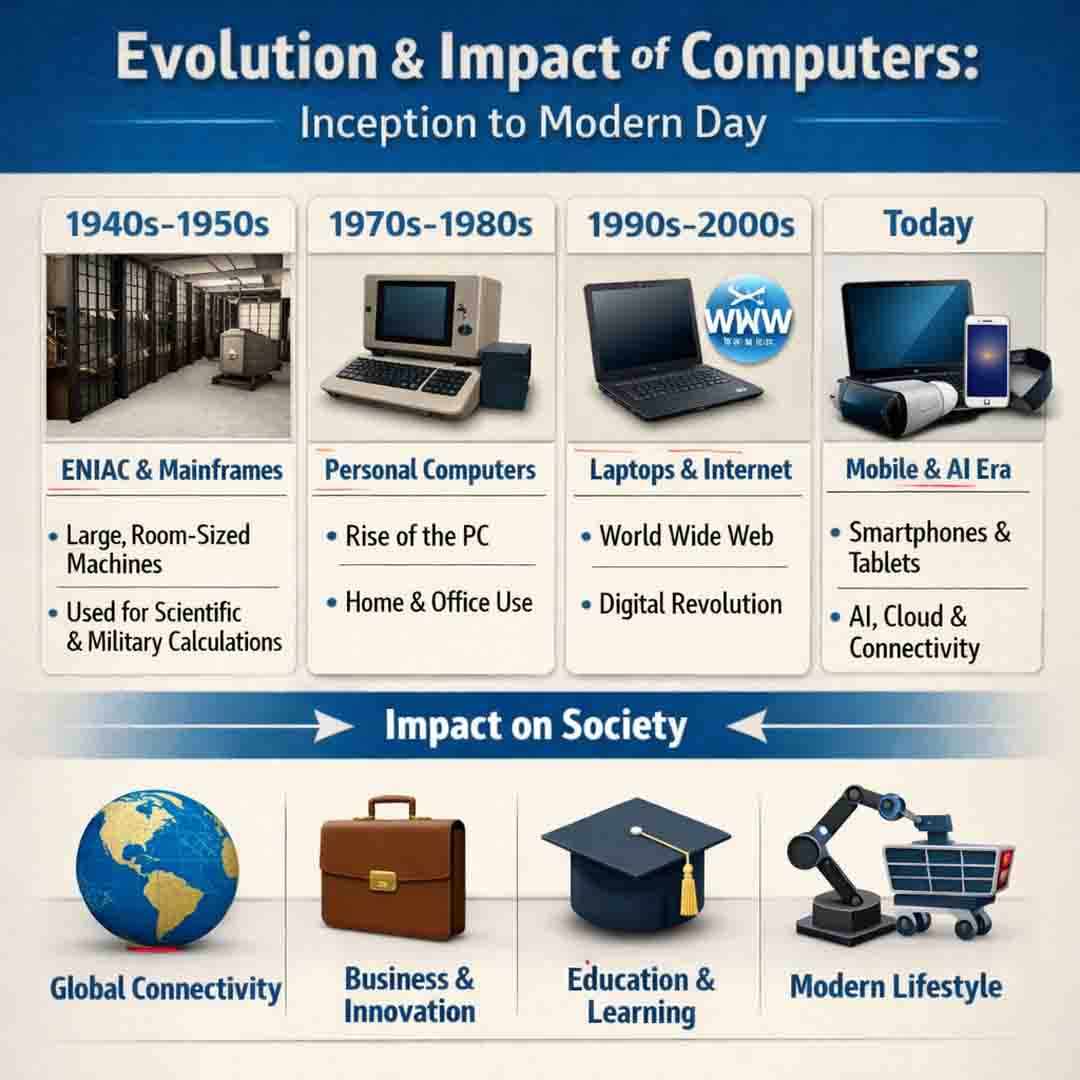

Evolution of computer systems from 1940 to 2025

The Early Beginnings of Computing

The idea of computing devices can be traced back to ancient civilizations. The abacus, which the Sumerians are believed to have used as early as around 2500 BCE, is generally regarded as one of the earliest computing devices. However, the foundations of modern computers were laid much later, in the 19th century, by Charles Babbage and Ada Lovelace.

Charles Babbage and Ada Lovelace

Charles Babbage, an English mathematician, is credited with designing the first general-purpose mechanical computer, the Analytical Engine, which he first described in 1837. Although it was never completed during his lifetime, its design contained the essential components of modern computers. For instance, the Analytical Engine's mill (processor) and store (memory) are analogous to the CPU and RAM in today's computers, and its input and output mechanisms laid the foundation for how users interact with computers.

Ada Lovelace, often recognized as the first computer programmer, worked closely with Babbage. In her 1843 notes on the Analytical Engine, she published what is widely regarded as the first algorithm intended to be carried out by a machine. Their collaboration, together with Lovelace's vision of machines that could go beyond pure calculation to work with symbols such as musical notes, is a testament to the power of partnership in innovation.
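Lovelace's best-known program, described in Note G of her 1843 notes, was a step-by-step method for computing Bernoulli numbers on the Analytical Engine. The Python sketch below is only a modern illustration of the same task, not a reconstruction of her table of operations. It assumes the standard recurrence (the sum of C(m+1, k) * B_k for k = 0..m equals 0) and the modern convention B_1 = -1/2; Lovelace's own numbering of the Bernoulli numbers differed from today's. The function name `bernoulli_numbers` is hypothetical.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions, using the convention B_1 = -1/2.

    Hypothetical helper: a modern recurrence, not Lovelace's original procedure.
    """
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # The recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0, solved for B_m.
        s = sum(comb(m + 1, k) * b[k] for k in range(m))
        b[m] = -s / (m + 1)
    return b


if __name__ == "__main__":
    print(bernoulli_numbers(8)[8])  # prints -1/30
```

Exact fractions are used instead of floating-point numbers so that values such as B_3 = 0 come out exactly rather than as tiny rounding errors.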

The Advent of Electronic Computers

The 20th century was a period of remarkable progress in computing technology, particularly during and after World War II. A significant milestone was the transition from mechanical to electronic computers, a shift that accelerated the pace of technological advancement.

The ENIAC and UNIVAC

The Electronic Numerical Integrator and Computer (ENIAC), a massive machine completed around 1945 by John Presper Eckert and John Mauchly, was among the first electronic general-purpose computers. It weighed about 30 tons, occupied about 1,800 square feet, and could perform thousands of calculations per second, an impressive achievement for its day.

Following ENIAC, Eckert and Mauchly developed the Universal Automatic Computer (UNIVAC), delivered in 1951. It was one of the first commercially available computers and the first commercial computer produced in the United States. UNIVAC's success signaled the beginning of the commercial computer industry, making computing power accessible to businesses and governments.

The Transistor Revolution

In 1947, John Bardeen, Walter Brattain, and William Shockley at Bell Labs invented the transistor. Transistors gradually replaced the bulky, power-hungry vacuum tubes used in machines such as ENIAC, making computers smaller, faster, more reliable, and cheaper to operate.

The Microprocessor and Personal Computers

The 1970s and 1980s were significant turning points, bringing the invention of the microprocessor and the arrival of the personal computer (PC).

The Intel 4004 and IBM PC

In 1971, Intel launched the 4004, the first commercially available microprocessor. By placing the core functions of a central processing unit (CPU) on a single tiny chip, it paved the way for smaller and cheaper computers.

The arrival of the IBM PC in 1981 revolutionized the computer industry. IBM's open architecture allowed other companies to build compatible hardware and software, creating a more competitive market. The IBM PC's success helped turn personal computers from specialized business tools into everyday necessities.

The Internet and the Digital Age

The growth of the Internet in the latter half of the 20th century greatly expanded what computers could do. The Internet grew out of ARPANET, a project funded by the U.S. Department of Defense that connected its first computers in 1969. Over the following decades, it evolved into a worldwide network linking millions of computers.

The World Wide Web

In 1989, British scientist Tim Berners-Lee, working at CERN, proposed the World Wide Web, which made the Internet far easier for ordinary people to use. The Web provided a user-friendly interface that let users quickly access, share, and create information. The subsequent development of web browsers such as Mosaic and Netscape further boosted the Internet's popularity, ushering in the digital age.

Importance of computers in the modern era

Today's computers far surpass earlier models in processing power, miniaturization, and connectivity.

Mobile Computing and the Cloud

The rise of tablets and smartphones has made computing omnipresent. Modern mobile devices are equipped with powerful processors, high-resolution screens, and a range of sensors that support a broad array of applications, including entertainment, communication, and productivity.

Cloud computing has further changed the computing world by letting users store and access their data and applications over the Internet. Services such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform provide scalable computing resources, allowing companies to expand or reduce capacity as their needs change instead of maintaining all of their own hardware.

Artificial intelligence (AI) and machine learning (ML) now sit at the center of computing technology. These tools allow computers to learn from data, identify patterns, and make decisions, driving advances in finance, healthcare, and autonomous systems.

AI-powered applications such as virtual assistants (e.g., Siri, Alexa), recommendation systems (e.g., Netflix, Amazon), and self-driving technology (e.g., Tesla) are great examples of the transformational potential of these technologies.

The Impact of Computers on Society

Computers have profoundly impacted many areas of our society, reshaping the economy, industry, and daily life.

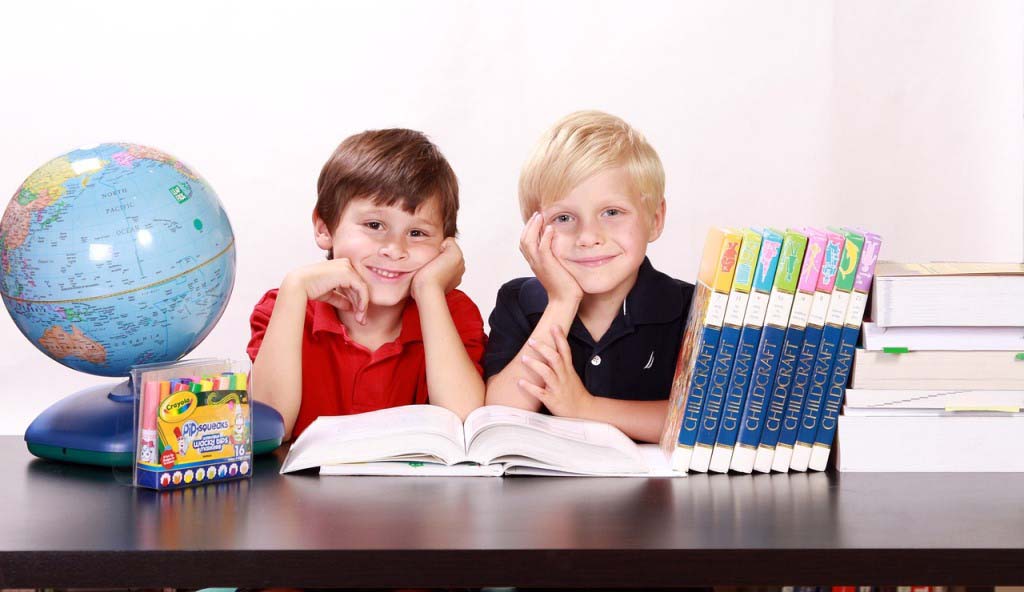

Education and Research

Computers have revolutionized education by offering access to a wide range of information and learning materials. Online learning platforms, virtual classrooms, and online courses make education more flexible and accessible. In research, computers enable sophisticated data analysis, simulation, and collaboration, accelerating the pace of discovery.

Business and Economy

Computers have increased efficiency, productivity, and innovation in business. Data analytics and automation have streamlined processes, while digital marketing and e-commerce have extended companies' market reach. Remote work and the gig economy, powered by computers and the Internet, offer new opportunities and greater flexibility for workers.

Computers in healthcare and science

Computers have transformed healthcare, making electronic health records (EHRs) and telemedicine possible. Computer-based imaging, diagnostic tools, and robotic surgery have significantly improved patient care. AI-driven software is especially promising, helping to predict diseases, accelerate drug discovery, and personalize medical treatment, offering a reassuring glimpse into the future of healthcare.

Entertainment and Communication

Computers have transformed the entertainment world through video games, digital media, and streaming services. Their influence goes beyond entertainment, however: social media networks, powered by computers, connect users across the globe, fostering communication and community and making the world feel like a global village.

The Future of Computing

The future of computing is filled with exciting possibilities. Emerging technologies promise to push the boundaries even further and bring transformative changes.

Quantum Computing

Quantum computing, which is based on the principles of quantum mechanics, could solve certain complex problems that are beyond the practical reach of conventional computers. Quantum computers could transform fields such as materials science, cryptography, and optimization.

Internet of Things (IoT)

The Internet of Things (IoT) describes a network of interconnected devices, ranging from smart home appliances to industrial sensors, that communicate and automate tasks with little human intervention. IoT can increase efficiency and sustainability and improve quality of life.

Ethical and Societal Considerations

The transformation of computers from simple mechanical devices into complex interconnected systems has reshaped our society. As technology continues to improve, computers will have an even greater influence on the future. Harnessing these advances while addressing the ethical and social issues they raise, such as privacy and security, is essential to unlocking the full potential of computing technology.

Conclusion

By exploring the history, innovations, and impact of computer technology, you can gain a deeper appreciation for this incredible technology and the profound effect it has on our everyday lives. From the revolutionary ideas of pioneers such as Charles Babbage and Ada Lovelace to the latest developments in AI and quantum computing, the story of computers is a testament to human ingenuity and the limitless possibilities of technological progress.